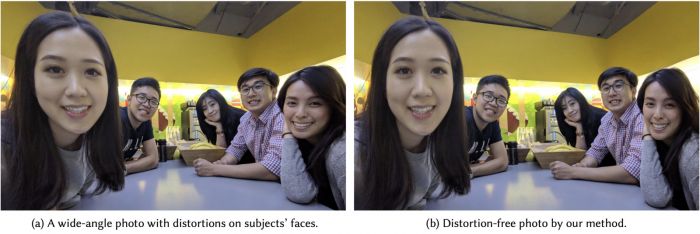

We’ve all done it. That moment when you’re asked to take a huge group photo with your wide-angle lens and you’re pushing it to the limits, with people from corner to corner. We all know that while we “got the shot,” the people on the outer edges are going to come out stretched and distorted. Now, with smartphones getting even wider lenses built into them, along with better add-on lenses from companies like Moment, we’re going to see this distortion in group photos even more.

This unnatural stretching, squishing, and/or skewing is known as anamorphosis, and you can see it in the corners and edges of nearly any wide-angle photograph. Thankfully, a group of researchers has found an efficient way to address it.

The researchers, from Google & MIT and led by YiChang Shih, describe their approach in a paper titled “Distortion-Free Wide-Angle Portraits on Camera Phones.” Their algorithm corrects the effect, making for more natural selfies and wide-angle portraits. Rather than correcting the whole frame uniformly, it builds a content-aware warping mesh and applies corrections only to the parts of the frame where faces are detected, maintaining a smooth transition between the faces near the edges and the rest of the image.

> Given a portrait as an input, we formulate an optimization problem to create a content-aware warping mesh which locally adapts to the stereographic projection on facial regions, and seamlessly evolves to the perspective projection over the background. Our new energy function performs effectively and reliably for a large group of subjects in the photo. The proposed algorithm is fully automatic and operates at an interactive rate on the mobile platform. We demonstrate promising results on a wide range of FOVs from 70° to 120°.
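To get a feel for why faces at the edges stretch, and why the abstract mentions the stereographic projection for faces, here is a small sketch comparing the two textbook projections it names: perspective, r = f·tan(θ), and stereographic, r = 2f·tan(θ/2). It assumes an idealized pinhole camera with focal length f and measures, at an angle θ from the optical axis, how much a small object is stretched along the radius relative to across it. This is not the researchers’ code, and the function names are hypothetical; it only illustrates the geometry, treating the quoted FOV as the full angle across the frame.

```python
import math


def perspective_radius(theta: float, f: float = 1.0) -> float:
    """Distance from image center under perspective (rectilinear) projection: r = f*tan(theta)."""
    return f * math.tan(theta)


def stereographic_radius(theta: float, f: float = 1.0) -> float:
    """Distance from image center under stereographic projection: r = 2f*tan(theta/2)."""
    return 2.0 * f * math.tan(theta / 2.0)


def edge_stretch(radius_fn, theta: float, f: float = 1.0, eps: float = 1e-6) -> float:
    """Ratio of radial to tangential magnification for a small object at angle theta.

    1.0 means the object keeps its shape; values above 1.0 mean it is
    stretched outward, away from the image center.
    """
    # Radial magnification: how fast the image radius grows with the viewing
    # angle (central finite difference of r(theta)).
    radial = (radius_fn(theta + eps, f) - radius_fn(theta - eps, f)) / (2.0 * eps)
    # Tangential magnification: an image circle of radius r corresponds to a
    # circle of radius sin(theta) on the unit viewing sphere.
    tangential = radius_fn(theta, f) / math.sin(theta)
    return radial / tangential


if __name__ == "__main__":
    # Half-angles for the 70-degree and 120-degree fields of view quoted in the paper.
    for fov_deg in (70, 120):
        theta = math.radians(fov_deg / 2)
        print(
            f"FOV {fov_deg} deg, edge: perspective stretch "
            f"{edge_stretch(perspective_radius, theta):.2f}, "
            f"stereographic stretch {edge_stretch(stereographic_radius, theta):.2f}"
        )
```

Under these assumptions, perspective projection stretches an object at the edge of a 120° frame about 2× along the radius (analytically 1/cos θ), while stereographic projection keeps the ratio at 1.0 because it preserves local shape. The catch is that stereographic projection bends straight lines, which is why the abstract describes using it only on facial regions and keeping perspective projection over the background.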

Here Are Some Sample Images From Their Before & After With The Optimized Meshes

To view their entire gallery of comparison images, click here.

The researchers report good results on photos with fields of view ranging from 70° to 120°, and the algorithm is fast enough to run “at an interactive rate” on a smartphone. More information is available on the project website, and I’d expect to see this feature built into Google/Android phones in the near future.

What are your thoughts? Have you noticed any distortion in your smartphone images? Would you be excited for this feature? I haven’t taken many selfies, so personally I’m more interested to see how this works for landscape images, but I’m still pumped to see this effect in action in the real world.

*Photos & Video shared with permission from the creators. Do not share these photos or video without direct written permission.
