Article Overview

In this article, we test Lightroom 4 and Lightroom 5 performance across several hardware configurations. Our goal is to determine the optimal hardware configuration for a Lightroom workstation while also measuring the performance difference between Lightroom 4 and Lightroom 5.

Introduction

Thanks to the awesome guys and gals at Newegg, we recently had the opportunity to co-build a high-end custom dual-Intel Xeon workstation for image and video post-production work. The new machine also gives us a chance to test it against our other high-performance Intel i7 machine, both to see whether Adobe Lightroom can take advantage of a dual-CPU computer and to compare performance in Adobe Premiere.


Lightroom Hardware Test Early Conclusion

For those of you lazy folk who aren’t interested in reading all the details of our tests, here is the “early conclusion.”

Hard Drive & Catalog Setups

From our tests, we didn’t note a significant difference in hard drive and catalog configurations. As long as you are working off of an internal SSD or a high-speed mechanical hard drive, whether you put the catalog and cache on the operating system drive, or a secondary SSD/high-speed mechanical data drive, it really won’t make a difference in Lightroom performance.

However, we did conclude that you are always better off using the fastest hard drive, even if that drive is also the operating system drive.

Single vs Dual CPU

Adobe has never claimed in its recommended hardware specifications that having two CPUs in a computer improves Lightroom performance. Before the test, we suspected from past experience that a single CPU with a higher individual clock speed would be faster than dual CPUs with a comparable combined clock speed. However, we wanted hard evidence to support this assumption.

Based on our tests with both Lightroom 4 and 5, there is indeed no performance gain from having two CPUs. In fact, our single-CPU machine, with its faster individual clock speed, significantly beat the dual-CPU setup with a similar combined clock speed.

To conclude, as of right now with Adobe Lightroom 4 and Adobe Lightroom 5, you will get the best performance out of a single high-speed processor. Overclocking is also a valid option for advanced users to boost Lightroom performance.

Lightroom 4 vs Lightroom 5 Performance

Adobe Lightroom 4 was faster than Lightroom 5 in every test. Based on the times reported below, Lightroom 5 was roughly 5-16% slower than Lightroom 4 at rendering 1:1 Previews and exporting, and 10-20% slower in image-to-image Develop Module lag.

Smart vs 1:1 Previews

Finally, we found that no combination of Smart Previews and 1:1 Previews consistently produced faster performance. In other words, we could not conclude that rendering 1:1 Previews and Smart Previews together was faster than rendering either type alone.

For details on all testing procedures and results, please continue reading.

Test Machine Specifications: THOR vs. HULK

For our tests, we are taking our Dual Xeon computer, dubbed HULK, and pitting it against our current performance king, THOR (and yes, we do like to name our high-end machines after Marvel characters).

We suspect that because Lightroom has not been optimized for dual CPUs, the faster single Intel i7 processor in THOR will produce faster results than the slower Dual Xeon setup in HULK. We will also be testing THOR in normal mode (CPU at 3.2GHz) and overclocked mode (CPU at 4.3GHz).

Here are the primary component specifications for each machine:

THOR Specifications

- Case: Rosewill THOR v2 ATX Full Tower Computer Case

- Motherboard: ASUS Sabertooth X79 LGA 2011 ATX Intel Motherboard

- Processor: Intel Core i7-3930K @ 3.2GHz stock (overclocked to 4.3GHz)

- Graphics Card: EVGA GeForce GTX 680 with 2GB video memory

- RAM: 32GB Mushkin memory

- Hard Drives: 512GB Samsung 840 SSD for OS and 4TB Western Digital Black for data

- Operating System: Microsoft Windows 8 Professional 64-bit (Full Version)

HULK Specifications

- Case: Rosewill BLACKHAWK ATX Mid Tower Computer Case

- Motherboard: ASUS Z9PE-D8 WS Dual LGA 2011 Intel Motherboard

- Processor: 2x Intel Xeon E5-2650 @ 2.0GHz per CPU

- Graphics Card: PNY Quadro 4000 Workstation Video Card

- RAM: 64GB PNY memory (8x 8GB)

- Hard Drives: PNY Prevail Elite 480GB SSD for OS and another 512GB PNY SSD for data

- Operating System: Microsoft Windows 8 Professional 64-bit (Full Version)

Lightroom Performance Testing Procedures

For all of our Lightroom tests, we created a single “Test Catalog” for Lightroom 4 and for Lightroom 5, applying the exact same Develop Preset from the SLR Lounge Lightroom Presets to every image. Both Test Catalogs were copied unaltered to a single external drive and then loaded from scratch onto each machine just before testing. Between tests, we cleared all cache and preview data to ensure accurate results.

Hard Drive Configuration Testing Procedures

There is a lot of speculation out there about the best hard drive configuration for the Lightroom catalog and cache. In our own experience, we haven’t noticed much of a difference regardless of the setup, so we wanted to put that theory to the test.

With each computer and CPU configuration, we ran four test setups for both Lightroom 4 and Lightroom 5, each using a different catalog and cache configuration:

Config 1 – Catalog and Cache on OS HD

Config 2 – Catalog on OS HD, Cache on Secondary Data HD

Config 3 – Catalog and Cache on Secondary Data HD

Config 4 – Catalog on Secondary Data HD, and Cache on OS HD

Hard Drive Configuration Testing Results

Across all of our configuration testing, results differed by less than 1% regardless of the hard drive configuration. The fastest variant (by only about 1%) was always to simply keep both the catalog and cache on the operating system SSD, on both machines and for both Lightroom 4 and Lightroom 5.

For this reason, we only report times from Configuration 1 in the test results below. We can conclude that as long as you are using an internal high-speed hard drive, the difference in performance is negligible. However, Lightroom consistently ran slightly better when the catalog and cache were on the fastest drive available, even when that drive was also the operating system drive. Splitting the catalog and cache did not improve performance, even when both drives were SSDs.

Test 1: Lightroom 4 1:1 Preview Rendering Results

Our first test simply measured the time it takes to render 1:1 Previews for all images in the Test Catalog. As expected, THOR’s faster processor produced much faster times than the 2GHz dual-CPU HULK in both normal and overclocked modes. In fact, when overclocked, the single-processor THOR finished in about 40% less time than the Dual Xeon HULK.

- Dual Xeon HULK: 100.5 seconds

- Normal THOR: 73.3 seconds

- Overclocked THOR: 60.1 seconds

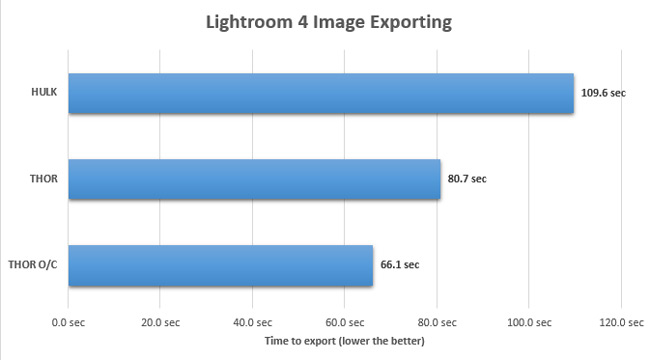
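The “about 40%” figure can be checked directly against the Test 1 times. Below is a minimal Python sketch that uses only the numbers reported above; the name `compare_to_baseline` is our own hypothetical helper. It reports both the share of time saved and the speedup factor, since “X% faster” is often used to mean either one.

```python
# Lightroom 4 1:1 Preview rendering times from Test 1, in seconds.
TEST1_LR4_PREVIEWS = {
    "Dual Xeon HULK": 100.5,
    "Normal THOR": 73.3,
    "Overclocked THOR": 60.1,
}


def compare_to_baseline(times: dict[str, float], baseline: str) -> dict[str, tuple[float, float]]:
    """Return {machine: (percent less time than baseline, speedup factor)}."""
    base = times[baseline]
    result = {}
    for machine, seconds in times.items():
        time_saved_pct = (1 - seconds / base) * 100  # share of the baseline's time saved
        speedup = base / seconds  # how many times faster than the baseline
        result[machine] = (time_saved_pct, speedup)
    return result


for machine, (saved, speedup) in compare_to_baseline(TEST1_LR4_PREVIEWS, "Dual Xeon HULK").items():
    print(f"{machine}: {saved:.1f}% less time, {speedup:.2f}x speed")
```

Run as-is, it reports that overclocked THOR needed about 40.2% less time than HULK (roughly a 1.67x speedup), and normal THOR about 27.1% less time (roughly 1.37x).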

Test 2: Lightroom 4 Image Export

With the same develop presets applied to all images, we ran identical exports on all machines to test overall export times. Just like the 1:1 Rendering test, THOR in both normal and overclocked modes outperformed the Dual Xeon HULK machine.

- Dual Xeon HULK: 109.6 seconds

- Normal THOR: 80.7 seconds

- Overclocked THOR: 66.1 seconds

Test 3: Lightroom 5 1:1 Preview Rendering

Afterwards, we moved on to our Adobe Lightroom 5 testing. As the results below show, 1:1 Preview rendering in Lightroom 5 is slower than in Lightroom 4 across the board, regardless of CPU and hard drive/catalog configuration.

- Dual Xeon HULK: 114.3 seconds

- Normal THOR: 79.1 seconds

- Overclocked THOR: 63.1 seconds

Test 4: Lightroom 5 Image Export

Lastly, our Lightroom 5 image export results showed a similar performance decline compared to Lightroom 4. We also saw no sign of additional optimization allowing Lightroom 5 to make better use of dual CPUs than Lightroom 4.

- Dual Xeon HULK: 127.4 seconds

- Normal THOR: 86.3 seconds

- Overclocked THOR: 71.6 seconds
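To put the Lightroom 4 vs. Lightroom 5 gap into numbers, here is a short Python sketch that computes how much extra time Lightroom 5 needed in Tests 1-4. The dictionary names and `slowdown_pct` are hypothetical; the times are exactly the ones reported above.

```python
# Reported times in seconds as (Lightroom 4, Lightroom 5) for each machine.
PREVIEW_1TO1 = {
    "Dual Xeon HULK": (100.5, 114.3),
    "Normal THOR": (73.3, 79.1),
    "Overclocked THOR": (60.1, 63.1),
}
EXPORT = {
    "Dual Xeon HULK": (109.6, 127.4),
    "Normal THOR": (80.7, 86.3),
    "Overclocked THOR": (66.1, 71.6),
}


def slowdown_pct(lr4: float, lr5: float) -> float:
    """Extra time Lightroom 5 needed, as a percentage of the Lightroom 4 time."""
    return (lr5 / lr4 - 1) * 100


for test_name, results in (("1:1 Previews", PREVIEW_1TO1), ("Export", EXPORT)):
    for machine, (lr4, lr5) in results.items():
        print(f"{test_name} / {machine}: LR5 is {slowdown_pct(lr4, lr5):.1f}% slower")
```

With these inputs, the slowdown ranges from about 5.0% (overclocked THOR, 1:1 Previews) to about 16.2% (HULK, export), and every machine got slower in both tests.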

Single vs. Dual CPU Conclusion

Unfortunately, like Adobe Lightroom 4, Adobe Lightroom 5 is simply not optimized to take advantage of dual CPUs.

There are many good reasons to upgrade to Lightroom 5, but outright speed is not one of them. Regardless of your hardware configuration, going from Lightroom 4 to Lightroom 5 will result in marginally slower performance in both 1:1 Preview rendering and image export.

Image-to-Image Develop Module Lag Testing

For each machine, CPU, and hard drive configuration, we attempted to measure the image-to-image Develop Module lag that became noticeable with Adobe Lightroom 4 and persists in Lightroom 5.

We wanted to see whether Lightroom 5 improves on this, especially since it adds Smart Previews alongside 1:1 Previews. To test, we timed image-to-image Develop Module lag within each catalog after rendering the following previews for each machine/configuration:

Lightroom 4 – 1:1 Previews

Lightroom 5 – 1:1 Previews alone

Lightroom 5 – 1:1 Preview + Smart Previews

Lightroom 5 – Smart Preview alone

We ran each test 3 times, clearing all cache and preview data between runs to verify accuracy.

Image-to-Image Develop Module Lag Results

We had initially suspected that Lightroom 5, with its 1:1 and Smart Preview functionality, would outperform Lightroom 4 in image-to-image Develop Module lag.

Unfortunately, not only did it not outperform Lightroom 4, but the results were also quite surprising. Overall, we found that Lightroom 5’s image-to-image lag was 10-20% slower than Lightroom 4’s, regardless of the system, CPU, and hard drive configuration.

Even more surprising was how inconsistent the results were. Across the 3 runs of each test, sometimes Smart Previews alone were quicker, sometimes 1:1 Previews alone were quicker, and at other times the expected 1:1 + Smart Previews combination was the quickest. As a result, we were unable to produce consistent, usable numbers to determine Lightroom 5’s optimal preview configuration.
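The individual run times are not published in this article, so the following is only a hypothetical Python sketch of how one could check whether a single preview mode wins consistently across repeated runs. The mode labels and the function names `fastest_mode_per_run` and `is_consistent` are illustrative assumptions, not part of our actual test setup.

```python
def fastest_mode_per_run(runs: list[dict[str, float]]) -> list[str]:
    """Return the preview mode with the lowest lag in each run.

    Each run maps a preview mode label (e.g. "1:1", "Smart", "1:1 + Smart")
    to a measured image-to-image lag in seconds.
    """
    return [min(run, key=run.get) for run in runs]


def is_consistent(runs: list[dict[str, float]]) -> bool:
    """True only if the same preview mode is fastest in every run."""
    return len(set(fastest_mode_per_run(runs))) == 1
```

A result of `False` from `is_consistent` is exactly the situation described above: a different preview mode came out on top in different runs, so no single “optimal” setting can be named.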

The only consistent factor we found was that despite rendering Smart and 1:1 Previews, Lightroom 5’s image-to-image Develop Module speed was at least 10% slower than Lightroom 4, and at some points it was up to 20% slower.

Conclusion

We had high hopes for Lightroom 5. We hoped that Adobe would give Lightroom 5 the ability to utilize additional system resources for greater speeds. What we found was that Lightroom 5 still under-utilizes system resources. On average, regardless of the system build, CPU, and CPU clock settings, Lightroom was only utilizing around 30-60% of the CPU. It was only during exporting that utilization reached 100%. We were hoping Lightroom 5 would feature some sort of pre-cache functionality that would enable Lightroom 5 to have better image-to-image Develop Module lag. Unfortunately, it is moderately slower in image-to-image lag as well.

This means that for Lightroom 4 and Lightroom 5 we are left with the same conclusion: once you have an SSD to work from and more than the recommended 4GB of RAM, the only thing that will meaningfully boost speed is a faster single processor, ideally overclocked.

We are going to test these machines in Adobe Premiere CC as well, and then we will be going back to Newegg to build out our dream machines for Adobe Lightroom still editing and Premiere video editing.

I hope you all enjoyed this article. Feel free to comment and leave your suggestions below.
